Crypto Agility – Buzzword Du Jour or Roadmap to Success

(Sponsored: Quantum Xchange) There has always been a crypto arms race to secure data against advances in computing and the creativity of attackers. Over the years, many cryptographic algorithms have been rendered insecure by advances in mathematics and computing power, and by the increasingly sophisticated exploits of hackers. But despite this history, and despite the importance of cryptography in the security stack, cryptography is decidedly not agile. It remains one of the most intractable, least modifiable components of our collective infrastructure.

Each application and device that encrypts data has cryptography embedded within it. Because of this, changing the way encryption is employed, especially the algorithms, is not an easy task – if it is possible at all. Forward-looking organizations have started to create Crypto Agility Teams to help identify and record what encryption is used and where. Even so, it’s probably safe to say that few organizations, if any, have a full catalog of the various encryption modules, algorithms, or even key lengths used in the applications and devices that secure the enterprise.
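To make the idea of a cryptographic catalog concrete, here is a minimal sketch of what such an inventory might record. The system names, the `CryptoRecord` fields, the list of quantum-vulnerable algorithms, and the 256-bit threshold for AES are all assumptions chosen for illustration, not a prescribed policy.

```python
from dataclasses import dataclass

# Assumption for illustration: public-key algorithms built on factoring or
# discrete logarithms are treated as quantum-vulnerable.
QUANTUM_VULNERABLE = {"RSA", "DH", "ECDH", "ECDSA", "DSA"}


@dataclass(frozen=True)
class CryptoRecord:
    system: str      # application or device name (hypothetical)
    algorithm: str   # e.g. "RSA", "AES"
    key_bits: int    # key length in bits
    purpose: str     # e.g. "key exchange", "bulk encryption"


def needs_review(record: CryptoRecord) -> bool:
    """Flag quantum-vulnerable public-key use and short symmetric keys."""
    if record.algorithm in QUANTUM_VULNERABLE:
        return True
    # Assumed policy: AES keys shorter than 256 bits get a second look.
    return record.algorithm == "AES" and record.key_bits < 256


# Hypothetical inventory entries.
inventory = [
    CryptoRecord("vpn-gateway", "RSA", 2048, "key exchange"),
    CryptoRecord("backup-service", "AES", 256, "bulk encryption"),
    CryptoRecord("legacy-crm", "AES", 128, "bulk encryption"),
]

flagged = [r.system for r in inventory if needs_review(r)]
print(flagged)  # ['vpn-gateway', 'legacy-crm']
```

Even a flat list like this answers the two questions a Crypto Agility Team starts with: what is in use, and which entries would need to change first.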

The Holy Grail for Adversaries

Advancements in computers, especially the quantum, probabilistic, and massively parallel varieties, have convinced most cryptography experts that it’s just a matter of time before certain aspects of encryption will be broken. Especially vulnerable is the area of asymmetric public key encryption (PKE) used to secure most of our data networks.

Symmetric encryption like AES is still considered safe from advanced computing but may require longer keys. It should be noted, however, that symmetric keys are distributed primarily via PKE. Breaking PKE would enable an adversary not only to steal data but also to act as an undetectable man-in-the-middle (MITM) on networks, reading or even changing data at will.

This makes breaking PKE the Holy Grail for any adversary, and hence tremendous resources are being poured into research to break it. Unlike predictable events of the past with an exact end date (think Y2K), there will be no warning when this day comes. No one will announce when they have broken PKE – if it hasn’t happened already.

Changing to newer encryption methods that protect against known and yet-to-be-discovered threats is not an easy task. Swapping one algorithm for another (hopefully) safer one seems simple, but it may not be the best option available today because modern networks are entirely different animals from those of the days when PKE was invented.

A Brief History of Public Key Encryption

PKE enabled the modern Internet, which now consists of billions of devices and many billions of network connections. Right now, it’s likely that your mobile phone has concurrent network connections to several different data and service providers such as email, chat, news, social media, weather, media streaming services, etc. But PKE methods such as Diffie-Hellman and RSA were envisioned in the 1970s, back when networks were point-to-point between a single pair of devices. One consequence of this now-archaic architecture is the assumption that all communications – the key exchange and the data protected by that key – must be sent over the lone connection and that the devices themselves generate the keys. That creates a “middle” to exploit, and we now know that insufficient entropy on a device can lead to deterministic keys. While not an issue in the 1970s, modern computers and algorithms make low-entropy key generation a real threat, and adding entropy to legacy devices is rarely possible. Unfortunately, when PKE was created, a separate method and channel for key generation and distribution wasn’t the option it is today.

The Middle Remains a Challenge

Now, nearly 50 years later, PKE architecture is entrenched in our software applications and devices. NIST is currently evaluating Post-Quantum Cryptographic (PQC) algorithms to replace the quantum-vulnerable ones used in current PKE. But these new algorithms are only believed to be safe from known attacks. If history is any guide, unforeseen attacks will crack some implementations.

Algorithm replacement, where possible, does not guarantee security. If a new algorithm is broken, data will be exposed.

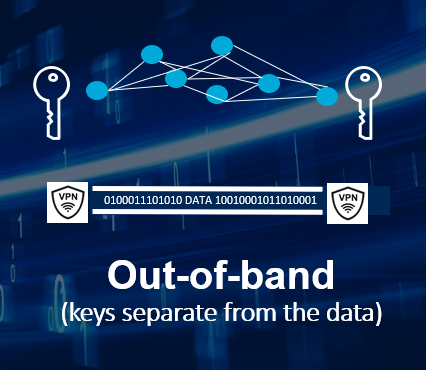

Imagine the cost and effort of replacing algorithms across the enterprise, only to find out that it needs to be done again in a panic! Notably, in all the proposed PQC candidate algorithms, the single-session key and data exchange architecture remains. If there is a single session for both keys and data, there will still be a middle to exploit – this is the real weakness of modern PKE.

Key Delivery Reimagined & True Crypto Agility Achieved

Imagine for a moment a new data security architecture where key generation and distribution are separate from data encryption and transmission. Keys are delivered via a service network that has no relation to the path the data takes. Data encryption still uses the quantum-safe symmetric algorithms that are embedded everywhere, and still delivers point-to-point between the parties exchanging data.

Removing the key from the data path enables high-entropy key generation, and a new data security architecture with several benefits, including:

- First, out-of-band key delivery could eliminate MITM attacks altogether because there is no middle anymore. An attacker would have at least two networks to compromise, and by diversifying the path that the keys take this could be many networks.

- Secondly, such an architecture would provide PKE systems with defense-in-depth countermeasures they currently lack because it facilitates the use of multiple keys. This means that the breach of an algorithm securing the key exchange will not expose the data.

- Last, and perhaps most important, it provides true crypto agility by enabling rapid adoption of new key exchange methods without network downtime or interrupting the underlying infrastructure. Just as it’s easier to change password policies in a central credential repository than on a user list for every app, it’s far easier to implement new key exchange algorithms, or entirely new technologies, on a centralized infrastructure than it is on each endpoint.
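The defense-in-depth point can be illustrated with a short sketch that derives the data encryption key from two independently delivered secrets. This is only an illustration under stated assumptions: `combine_keys` is a hypothetical helper, HMAC-SHA256 is one reasonable way to mix the inputs rather than the method any particular product uses, and random bytes stand in for a real key exchange and a real out-of-band delivery.

```python
import hashlib
import hmac
import secrets


def combine_keys(in_band_secret: bytes, out_of_band_key: bytes) -> bytes:
    """Derive a 256-bit data key from two independently delivered secrets.

    HMAC-SHA256 is keyed with the out-of-band key, so an attacker who
    recovers only the in-band secret (for example, by breaking the key
    exchange algorithm) is not expected to be able to compute the result.
    """
    return hmac.new(out_of_band_key, in_band_secret, hashlib.sha256).digest()


# Stand-ins for illustration only: random bytes replace the output of a
# real key exchange and a key delivered over a separate network.
in_band = secrets.token_bytes(32)
out_of_band = secrets.token_bytes(32)

# Both endpoints hold the same two inputs, so both derive the same key.
data_key = combine_keys(in_band, out_of_band)
```

Because the final key depends on both inputs, replacing the algorithm behind either one changes how a secret is produced but not how the endpoints use the resulting symmetric key.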

As the Information Age of computing gives way to the Quantum Age, commercial businesses and government agencies around the world will need to prepare for the largest cryptographic transition in the history of computing. As more is learned about the power of supercomputers, we may even need to deploy entirely new technologies based on quantum physics, such as Quantum Key Distribution (QKD), to securely exchange keys.

It simply doesn’t make sense to spend billions on classical cyber protections that will be obsolete in a few years, as hackers inevitably find their way around those safeguards, instead of investing in a key distribution architecture for the ages. Separating key generation and distribution from the data is a great place to start.

About the Author

Eric Hay is the Director of Field Engineering at quantum-security startup Quantum Xchange. As one of the company’s first hires, he has been instrumental in the company’s efforts to commercialize quantum-safe encryption technologies including Quantum Key Distribution (QKD) and Post-Quantum Cryptography (PQC), guiding prospects, partners, and customers from decision making to deployment.