Inside Quantum Technology’s Inside Scoop: Quantum and Deepfake Technology

Thanks to advances in technology, it is becoming harder to tell what is real and what isn’t. Deepfake technology, which uses AI to replace individuals or their voices in audio and video, makes this problem worse. While many deepfakes have been used successfully for entertainment (such as placing Nicolas Cage in Raiders of the Lost Ark) or gaming (such as rendering athletes in FIFA), a large percentage of them have been created for more sinister reasons. As it becomes easier to create these doctored videos, many experts are hoping that quantum computing can help to overcome the potential threats of this rising technology.

How Does Deepfake Technology Work?

To create a successful deepfake video, you need machine learning algorithms. “Deep learning algorithms teach themselves how to solve problems from large data sets, and then are used to swap faces across video and other digital content,” explained Post-Quantum CEO Andersen Cheng. Post-Quantum is a leading cybersecurity company whose focus is on quantum-resistant security, including against deepfakes. “There are a number of methods for creating these deepfakes,” Cheng stated, “but the most popular is using deep neural networks involving autoencoders. An autoencoder is a deep learning AI program that studies video clips to understand what a person looks like from multiple angles and the surrounding environment, and then it maps that person onto the individual by finding common features.”

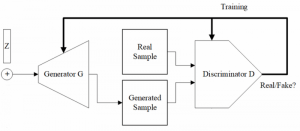

A deepfake technology setup (PC: Wikimedia Commons)

For the autoencoder to work well, multiple video clips of the subject’s face need to be analyzed to give a larger pool of data. The autoencoder can then help create a composite video by swapping the original individual with the new subject. A second type of machine learning model, called a Generative Adversarial Network (GAN), detects and corrects flaws in the new composite video. According to a 2022 article: “GANs train a ‘generator’ to create new images from the latent representation of the source image and a ‘discriminator’ to evaluate the realism of the generated materials.” This process repeats until the discriminator can no longer tell that the video is doctored, at which point the deepfake is complete.
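The back-and-forth between generator and discriminator can be sketched in a few lines of Python. This is only a toy under stated assumptions: each "image" is a single number, the generator learns one offset, and the discriminator is a one-weight logistic classifier. The names `ToyGAN`, `discriminator_step`, `generator_step`, and `train`, along with the "real" data distribution, are hypothetical and are not taken from any deepfake tool. Real systems use deep networks over video frames, and even simple adversarial setups like this one may oscillate rather than settle.

```python
import math
import random


def sigmoid(t: float) -> float:
    """Logistic function; the input is clamped to avoid overflow in exp."""
    t = max(-60.0, min(60.0, t))
    return 1.0 / (1.0 + math.exp(-t))


class ToyGAN:
    """1-D stand-in for a GAN: every 'image' is a single number."""

    def __init__(self) -> None:
        self.b = 0.0  # generator parameter: fake = b + noise
        self.w = 0.1  # discriminator weight
        self.c = 0.0  # discriminator bias

    def generate(self, z: float) -> float:
        """Generator: turn a noise value z into a fake sample."""
        return self.b + z

    def realism(self, x: float) -> float:
        """Discriminator: estimated probability that x is real."""
        return sigmoid(self.w * x + self.c)

    def discriminator_step(self, real: float, fake: float, lr: float) -> None:
        # One gradient step on -[log D(real) + log(1 - D(fake))].
        d_real, d_fake = self.realism(real), self.realism(fake)
        self.w -= lr * ((d_real - 1.0) * real + d_fake * fake)
        self.c -= lr * ((d_real - 1.0) + d_fake)

    def generator_step(self, z: float, lr: float) -> None:
        # One gradient step on -log D(generate(z)), the non-saturating loss.
        d_fake = self.realism(self.generate(z))
        self.b -= lr * (d_fake - 1.0) * self.w


def train(steps: int = 500, lr: float = 0.05, seed: int = 0) -> ToyGAN:
    """Alternate discriminator and generator updates."""
    rng = random.Random(seed)
    gan = ToyGAN()
    for _ in range(steps):
        real = rng.gauss(4.0, 0.5)  # hypothetical "real" data
        z = rng.gauss(0.0, 0.5)     # generator noise
        gan.discriminator_step(real, gan.generate(z), lr)
        gan.generator_step(z, lr)
    return gan


if __name__ == "__main__":
    gan = train()
    print(f"generator offset b = {gan.b:.2f}")
    print(f"realism score of a fresh fake = {gan.realism(gan.generate(0.0)):.2f}")
```

Each discriminator step makes the classifier slightly better at separating real from fake samples, and each generator step nudges the fakes toward whatever the classifier currently rates as realistic. That tug-of-war is the mechanism the quoted article describes.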

The Threat of Deepfake Technology

Currently, there are many open-source programs and free apps that individuals can use to create deepfakes. While this may seem beneficial for many, especially those in the entertainment industry, it has led to some serious, even criminal, problems. According to a Deeptrace report, 96% of the deepfake videos online in 2019 were, not surprisingly, pornographic. While many of these illicit videos were made for revenge on an ex, others were used to create scandals for female celebrities and even politicians. In 2018, a Belgian political party released a deepfake video showing then-President Trump discussing the Paris Climate Accords. With fake news already becoming an issue for the general public, deepfake videos could be the straw that breaks the camel’s back. Even deepfake audio is wreaking havoc: one doctored audio file mimicking the CEO of a technology company was used to help commit an act of fraud. For Cheng, these types of media can wear down public trust rather quickly. “We have the broader issue of societal trust: how will the public be able to discern between what’s real and what’s a deepfake,” Cheng added. “As we have seen, there is even evidence that deepfakes are being used to bypass protective measures such as biometric authentication.” With these growing concerns, Cheng and his team at Post-Quantum believe that they have a solution in the form of Nomidio, a specialized ultra-secure software product.

Preparing for Deepfake Technology Threats

Looking at the multiple threats posed by quantum computing and deepfakes, Cheng and his team created Nomidio to make sure login identities and even biometric authentication remain secure. “Nomidio is a biometric, passwordless multi-factor biometric (MFB) service that enables secure authentication with a simple and intuitive user experience,” Cheng said. “It replaces username/password based login and single sign-on, with users being authenticated against their biometric profile with multi-factor authentication (MFA) behind the scenes.” As Cheng has been an expert in cybersecurity for many years, he made sure Nomidio could also be secure against deepfakes. “Our core philosophy when creating it was to use as much additional input as possible and true multi-factor authentication (i.e. with more than two factors), so it is in fact the ideal solution for tackling any future development in deepfake technology. This is ultimately down to the fact that traditional MFA is insufficient, but MFB can make real-time attacks virtually impossible. That is, a combination of, for example, voice, face, and a PIN code is highly secure by the fact that any single factor may be possible to fake, but to fake all three in the same instance is virtually impossible. With Nomidio, a combination of voice and face biometrics, speech recognition, context-dependent data, and even behavioral analysis, can be combined into a single authentication system.”
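Cheng’s point about combining factors can be illustrated with a short Python sketch. It is not Nomidio’s API or algorithm: `FactorResult`, `authenticate`, and `joint_spoof_probability` are hypothetical names, the scores and thresholds are made up, and the probability calculation assumes an attacker’s chances of faking each factor are independent, which may not hold in practice.

```python
from dataclasses import dataclass
from math import prod
from typing import Sequence


@dataclass(frozen=True)
class FactorResult:
    """Outcome of checking one factor (hypothetical structure)."""
    name: str
    score: float      # match confidence in [0, 1]
    threshold: float  # minimum score needed to accept this factor


def authenticate(results: Sequence[FactorResult], min_factors: int = 3) -> bool:
    """Accept only if enough factors were checked and every one passed."""
    if len(results) < min_factors:
        return False
    return all(r.score >= r.threshold for r in results)


def joint_spoof_probability(per_factor: Sequence[float]) -> float:
    """Chance of faking every factor at once, ASSUMING independence."""
    return prod(per_factor)


if __name__ == "__main__":
    attempt = [
        FactorResult("voice", score=0.93, threshold=0.80),
        FactorResult("face", score=0.95, threshold=0.90),
        FactorResult("pin", score=1.00, threshold=1.00),
    ]
    print("accepted:", authenticate(attempt))
    # Illustrative numbers only: a 10% chance of faking each factor.
    print("joint spoof chance:", joint_spoof_probability([0.1, 0.1, 0.1]))
```

Under the independence assumption, three factors that could each be faked one time in ten would all be faked together about one time in a thousand, which is the intuition behind requiring every factor to pass in the same attempt.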

While Nomidio itself doesn’t leverage quantum computing to overcome deepfake threats, quantum computers could potentially work against these fake media files. Because quantum computers may one day speed up certain machine learning algorithms, they might be able to detect fake videos or audio files faster than today’s systems. While the technology is still being developed, and few are looking at deepfakes as a potential use case for quantum computers, these next-level machines could be used in the future to make our media more truthful and accurate.

With the threats of deepfake technology becoming more and more apparent, many governments and companies are already trying to find ways to combat it. In 2019, Facebook launched the Deepfake Detection Challenge, with a $500,000 prize for new technology to detect deepfakes. In the U.S., states like California, Texas, and Virginia have laws that restrict the use of deepfakes in pornography or politics. The European Parliament has also moved to regulate deepfakes, modifying the Digital Services Act to require labels on deepfake videos. While this legislation won’t take effect until 2024, it does show the seriousness of the deepfake technology threat.

Kenna Hughes-Castleberry is a staff writer at Inside Quantum Technology and the Science Communicator at JILA (a partnership between the University of Colorado Boulder and NIST). Her writing beats include deep tech, the metaverse, and quantum technology.