(Forbes.com) The article succinctly defines quantum computing as the next frontier: machines that think not in bytes but in powerful qubits. Scientists have been studying the theory of quantum computing for 30 years, and some say the first mainstream applications are just around the corner. The author briefly introduces small startups such as Scottish startup M Squared. The author also notes that larger companies with bigger resources are working on putting qubits on chips, including Microsoft, IBM and Google, as are a host of secretive startups.

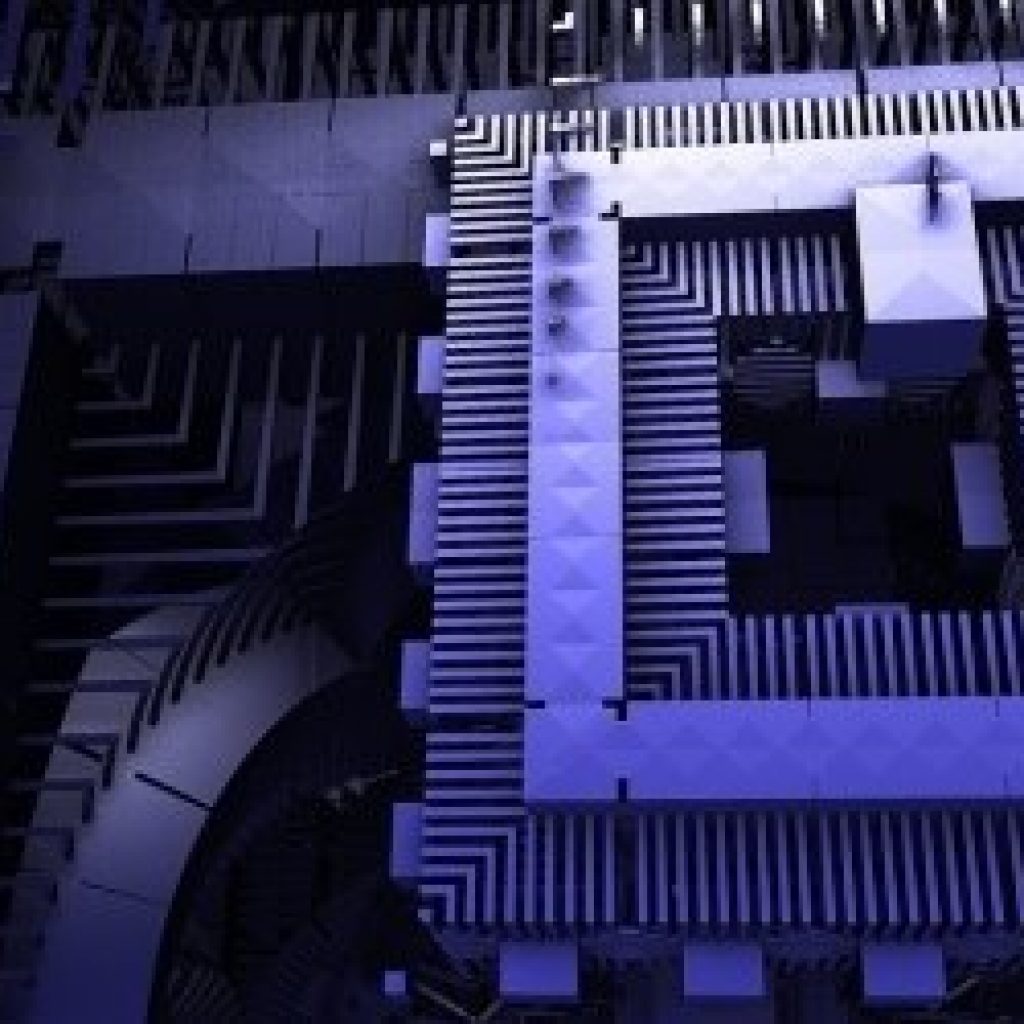

The article explains the two main approaches to quantum computing: 1) the superconducting approach, pursued by Google and IBM, which involves making artificial atoms at cryogenic temperatures; and 2) the cold-atom (or cold-ion) approach, in which engineers trap and cool real atoms. The second is a completely different approach, but potentially more efficient.

The article closes with a discussion of applications and concludes that one of the first will probably be in cryptography, since a sufficiently large quantum computer could break much of today's widely used public-key encryption. Luckily, cryptologists are already working on encryption algorithms that are “quantum-proof.”

Two-Minute Guide to Quantum Computing