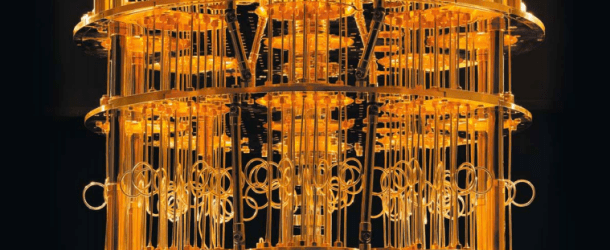

Google’s AI Researchers Build Machine Learning Algorithm to Model Unwanted Noise in Quantum Computers

(TheRegister.uk) AI researchers at Google have built a machine learning algorithm to model unwanted noise that can disrupt qubits in quantum computers.

Qubits must be carefully controlled to make them interact with one another in a quantum system, so Google engineers developed a reinforcement learning algorithm for what they call “quantum control optimization”.

“Our framework provides a reduction in the average quantum logic gate error of up to two orders-of-magnitude over standard stochastic gradient descent solutions and a significant decrease in gate time from optimal gate synthesis counterparts,” the team said this week.
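The “average quantum logic gate error” in that quote is commonly computed from the average gate fidelity. For a target unitary U and an implemented unitary V on d levels, F = (d + |Tr(U†V)|²) / (d(d + 1)), and the gate error is 1 − F. The minimal Python sketch below applies that standard formula to a single-qubit X gate with a small over-rotation; the error model, the angles and the helper names are illustrative assumptions, not Google's setup.

```python
import math

Matrix = list[list[complex]]

def matmul(a: Matrix, b: Matrix) -> Matrix:
    """Multiply two 2x2 complex matrices."""
    return [[sum(a[i][k] * b[k][j] for k in range(2)) for j in range(2)]
            for i in range(2)]

def dagger(a: Matrix) -> Matrix:
    """Conjugate transpose of a 2x2 matrix."""
    return [[a[j][i].conjugate() for j in range(2)] for i in range(2)]

def rx(theta: float) -> Matrix:
    """Single-qubit rotation about x: exp(-i * theta/2 * sigma_x)."""
    c, s = math.cos(theta / 2), math.sin(theta / 2)
    return [[complex(c, 0), complex(0, -s)],
            [complex(0, -s), complex(c, 0)]]

def average_gate_error(target: Matrix, actual: Matrix, d: int = 2) -> float:
    """1 - F, with F = (d + |Tr(U^dagger V)|^2) / (d * (d + 1)) for unitaries U, V."""
    m = matmul(dagger(target), actual)
    overlap = abs(m[0][0] + m[1][1]) ** 2
    return 1.0 - (d + overlap) / (d * (d + 1))

ideal_x = rx(math.pi)  # X gate, up to a global phase
for delta in (0.1, 0.01):  # hypothetical over-rotation angles in radians
    err = average_gate_error(ideal_x, rx(math.pi + delta))
    print(f"over-rotation {delta} rad -> average gate error {err:.3e}")
```

For this error model the gate error works out to (2/3)·sin²(δ/2), so it grows roughly as δ²: shrinking the over-rotation tenfold cuts the gate error about a hundredfold, which is what a two-orders-of-magnitude reduction looks like on this metric.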

The algorithm’s goal is to predict the amount of error introduced in a quantum system based on the state it’s in, and to model how that error can be reduced in simulations. “Our results open a venue for wider applications in quantum simulation, quantum chemistry and quantum supremacy tests using near-term quantum devices,” Google concluded.
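To make the idea of reducing modelled error in simulation concrete, here is a deliberately small Python sketch. It is not Google's method: their framework uses reinforcement learning, whereas this sketch swaps in a plain grid search over a single control knob, the commanded rotation angle of an X gate. The noise model (a hypothetical 5% systematic over-drive plus Gaussian amplitude jitter) and all function names are assumptions chosen for illustration. It relies on the standard single-qubit result that an X rotation missing its target angle by δ has average gate error (2/3)·sin²(δ/2).

```python
import math
import random

def rotation_error(delta: float) -> float:
    """Average gate error of an X rotation that misses pi by delta radians:
    1 - (2 + 4*cos(delta/2)**2) / 6, which simplifies to (2/3) * sin(delta/2)**2."""
    return (2.0 / 3.0) * math.sin(delta / 2.0) ** 2

def expected_error(commanded: float, miscal: float, jitter: list[float]) -> float:
    """Mean gate error over noise samples. Hypothetical noise model: the hardware
    applies commanded * (1 + miscal + eta) instead of the commanded angle."""
    return sum(rotation_error(commanded * (1.0 + miscal + eta) - math.pi)
               for eta in jitter) / len(jitter)

def tune_angle(miscal: float, jitter: list[float],
               lo: float = 2.5, hi: float = 3.5, steps: int = 1001) -> float:
    """Grid search for the commanded angle with the lowest simulated error
    (a simple stand-in for a learned control policy)."""
    grid = (lo + (hi - lo) * i / (steps - 1) for i in range(steps))
    return min(grid, key=lambda a: expected_error(a, miscal, jitter))

rng = random.Random(0)                               # fixed seed for repeatability
jitter = [rng.gauss(0.0, 0.01) for _ in range(200)]  # assumed 1% amplitude noise
miscal = 0.05                                        # assumed 5% systematic over-drive

naive_error = expected_error(math.pi, miscal, jitter)
best_angle = tune_angle(miscal, jitter)
tuned_error = expected_error(best_angle, miscal, jitter)
print(f"naive pi pulse: error {naive_error:.2e}")
print(f"tuned {best_angle:.3f} rad: error {tuned_error:.2e}")
```

Even this crude search removes most of the systematic part of the error, leaving mainly the random jitter, which no single fixed angle can cancel.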